Looking to the Future by Appreciating the Past

Posted on January 3, 2017

I’ve recently come to the end of an interesting and exciting journey of re-discovery which began on Thanksgiving 2016.

While waiting for Thanksgiving dinner to be ready, we had time to kill. My son, Glenn, is 11 and has become a whiz with Lego Mindstorms, and I’ve been introducing electronics and programming to him a little here and there over the years. It had been a while since I’d done basic discrete electronics with him, so I went into the garage, dusted off a parts box, and sat down with him to plug LEDs and resistors into a breadboard. After we reviewed Ohm’s law and some basics, he started pulling out servos and other parts, but it was dinner time, so I put it all away.

It was clear he was ready for more, and I hadn’t done any serious electronics in over a decade. Over the years I’d pick up Arduinos or parts kits at Maker Faires and other events, but I had never gotten excited about electronics in any serious way. Finally, with the pressure of wanting to teach my son correctly, I had a real internal drive to dust off my knowledge and start building things! At least, that’s where it started.

That night I cleaned off my workbench and set out to re-prove Ohm’s and Kirchhoff’s laws to myself and re-learn series circuit design. That gave way to parallel circuit design, which gave way to building more and more complex circuits from discrete components. When I got to transistors I realized that while I’d studied building logic gates out of transistors, I’d never actually done it, so I started building simple gates and testing them. Along the way I found there were measurement tools I needed and didn’t have, and thanks to my old stack of unused Arduinos I was able to build several sensors and tools to help with my experiments.
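If you want to check the series and parallel rules numerically, here is a small Python sketch. It assumes ideal resistors and a hypothetical circuit (a 5 V supply driving a 220 Ω resistor in series with two 1 kΩ resistors in parallel); the values are illustrative, not from my bench notes.

```python
def series(*resistances: float) -> float:
    """Resistors in series carry the same current, so their resistances add."""
    return sum(resistances)


def parallel(*resistances: float) -> float:
    """Resistors in parallel share the same voltage and their branch currents
    add (Kirchhoff's current law), so their conductances (1/R) add."""
    return 1.0 / sum(1.0 / r for r in resistances)


SUPPLY_V = 5.0                          # hypothetical supply voltage
r_branch = parallel(1000.0, 1000.0)     # two 1 kOhm resistors -> 500 Ohm
r_total = series(220.0, r_branch)       # 220 Ohm + 500 Ohm -> 720 Ohm
i_total = SUPPLY_V / r_total            # Ohm's law: I = V / R

# Kirchhoff's voltage law: the drops around the loop sum to the supply.
v_drops = [i_total * 220.0, i_total * r_branch]
print(f"R = {r_total:.0f} ohm, I = {i_total * 1000:.2f} mA, "
      f"drops = {[round(v, 3) for v in v_drops]} V")
```

Swapping in your own resistor values is a quick way to sanity-check a breadboard before reaching for the multimeter.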

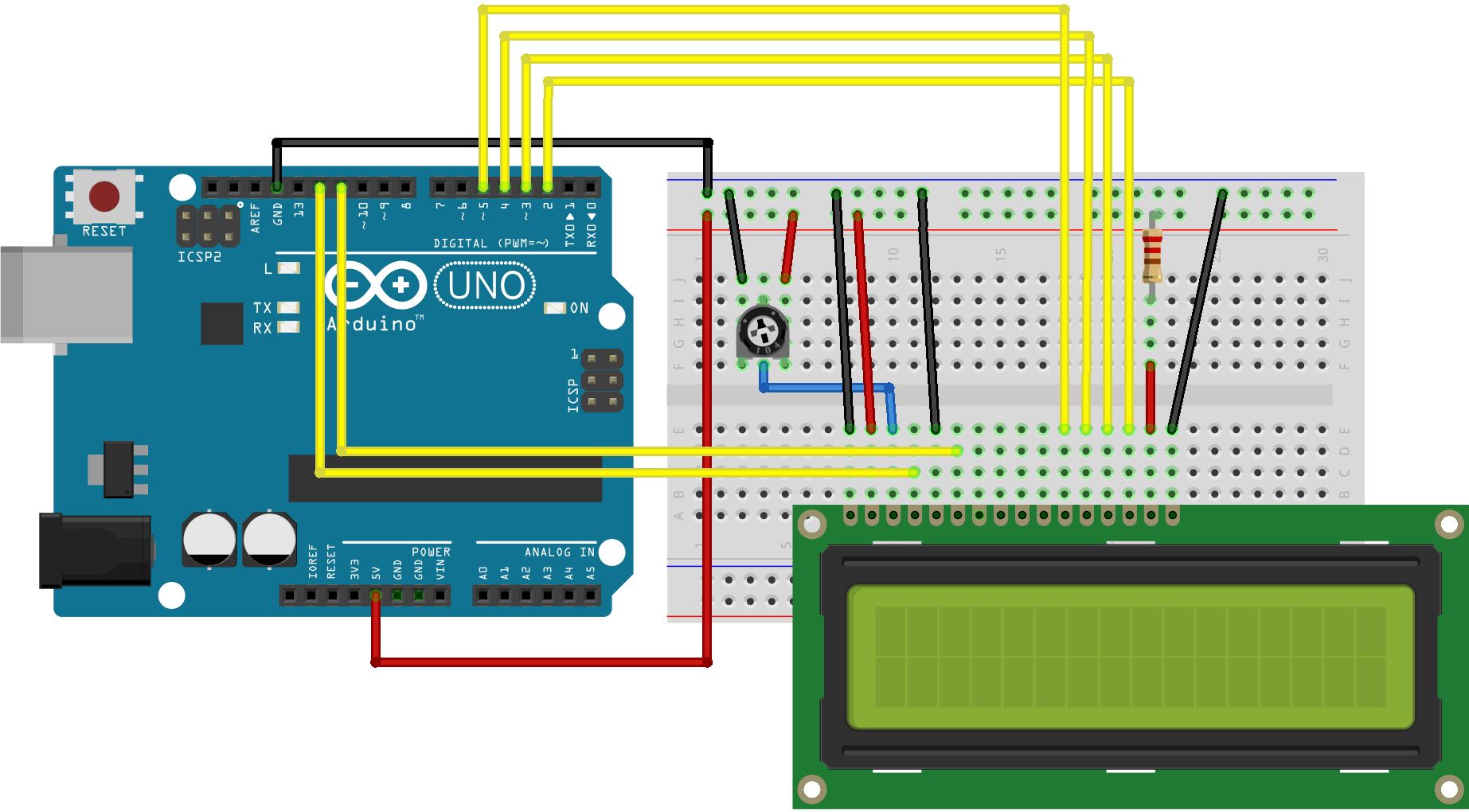

At that point I felt I had rebuilt enough electronics knowledge to start doing some interesting Arduino projects. When I dusted off an old character LCD (the kind driven by Arduino’s LiquidCrystal library), I found something that intrigued me. There are a lot of pins on the LCD, but its operation is really simple: 8 data pins carry the 8 bits of an instruction or character, a read/write pin selects the direction, a register select pin chooses between instructions and data, and an enable pin latches in the values on the 8 data pins. It hit me like a ton of bricks that this wasn’t dissimilar from the way a computer’s data bus works. The operation is simple enough that you can program it by tapping in instructions with buttons wired to the pins. Suddenly all kinds of lights came on, and I was excited to re-examine the basics of CPUs with a new appreciation.
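To make the bus analogy concrete, here is a toy Python model of that write interface. It assumes an HD44780-compatible controller (the chip most of these character LCDs use), which samples the data pins when the enable pin falls from high to low; real timing, the busy flag, reads, and 4-bit mode are all ignored, and the class and helper names are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ToyLcd:
    """Hypothetical model of an HD44780-style parallel write interface."""
    instructions: list[int] = field(default_factory=list)  # RS = 0
    characters: list[int] = field(default_factory=list)    # RS = 1
    _last_e: int = 0

    def set_pins(self, d: int, rs: int, rw: int, e: int) -> None:
        # The controller only acts on a high-to-low transition of E.
        # rw == 0 means "write"; reads are not modeled here.
        if self._last_e == 1 and e == 0 and rw == 0:
            target = self.characters if rs else self.instructions
            target.append(d & 0xFF)
        self._last_e = e


def write_byte(lcd: ToyLcd, value: int, rs: int) -> None:
    """Set up D0-D7 and RS, then pulse E like pressing a button."""
    lcd.set_pins(value, rs, 0, 0)  # data and RS settle while E is low
    lcd.set_pins(value, rs, 0, 1)  # E high: nothing is latched yet
    lcd.set_pins(value, rs, 0, 0)  # E falls: the byte is latched


lcd = ToyLcd()
for cmd in (0x38, 0x0C, 0x01):  # function set (8-bit, 2 lines), display on, clear
    write_byte(lcd, cmd, rs=0)
for ch in b"Hi":
    write_byte(lcd, ch, rs=1)
print([hex(c) for c in lcd.instructions], bytes(lcd.characters).decode())
```

Nothing happens while the pins merely sit at a value; only the edge on E commits the byte, which is exactly how a latch on a computer bus behaves.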

From there I started looking at how I might build a simple 4-bit computer from the knowledge I’d acquired. When you consider the technology you can actually put your hands on and build on breadboards, you’re talking about the late 1960s and early 1970s, before the Intel 4004 changed things forever. I found a thriving community of TTL (Transistor-Transistor Logic) fans out there, which is really the electronics-minded equivalent of a home-brew computer community. I found tons of people who have built their own SAP (Simple As Possible) computers in 4-bit and 8-bit configurations. This let me jump way ahead by studying their work rather than spending months learning through my own trial and error. Within a week I was becoming confident I could build my own machine… but then I thought ahead to the next problem: programming the machine.

Some of the ways computers are explained to people aren’t incorrect, but they are over-simplifications that are ultimately misleading. One is that data “flows” on a bus. On a parallel data bus that isn’t true: the bus is in a single state at any given time, and time is divided up by the clock signal. Some circuits are connected to other circuits, allowing voltage to flow in a certain configuration; then the clock steps the computer, a new set of circuits is connected to another set, and the clock steps again. The pretty illustrations show data pulsing around in a constant flow because it’s easy to comprehend, but that picture leads you astray. Once you understand flip-flops and latches and then tie them together on a bus, suddenly things start looking like a computer, and a very simple one to comprehend. Add 2 registers, an arithmetic logic unit (ALU), and a little bit of memory, and you’re in business!
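Here is a minimal Python sketch of that idea, assuming a SAP-style design with a single 8-bit bus, two registers (A and B), an output register, and an adder-only ALU; the class and method names are hypothetical. Each call to `tick` is one clock cycle: exactly one component drives the bus, and the registers told to load all latch that one value on the clock edge. Nothing “flows”; the machine just moves from one bus state to the next.

```python
from dataclasses import dataclass, field


@dataclass
class TinyMachine:
    """Toy single-bus machine: one driver per clock cycle, many possible loaders."""
    ram: dict[int, int]
    regs: dict[str, int] = field(
        default_factory=lambda: {"A": 0, "B": 0, "OUT": 0})
    bus_history: list[int] = field(default_factory=list)

    def alu(self) -> int:
        # Combinational adder: its output always reflects A + B (8-bit wrap).
        return (self.regs["A"] + self.regs["B"]) & 0xFF

    def tick(self, driver: str, loaders: list[str], addr: int = 0) -> None:
        # 1. Exactly one component drives the bus for this whole cycle.
        if driver == "RAM":
            bus = self.ram[addr]
        elif driver == "ALU":
            bus = self.alu()
        else:
            bus = self.regs[driver]
        self.bus_history.append(bus)
        # 2. Clock edge: every selected register latches that single value.
        for name in loaders:
            self.regs[name] = bus


m = TinyMachine(ram={14: 28, 15: 14})
m.tick("RAM", ["A"], addr=14)  # A   <- RAM[14]
m.tick("RAM", ["B"], addr=15)  # B   <- RAM[15]
m.tick("ALU", ["A"])           # A   <- A + B
m.tick("A", ["OUT"])           # OUT <- A
print(m.regs["OUT"], m.bus_history)  # 42 [28, 14, 42, 42]
```

Because the ALU value is read before any register updates, A can be fed its own sum in a single cycle without a race, which mirrors why real designs latch on a clock edge.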

One thing I’ll note: people make a big deal of how “stupid” Bill Gates was for supposedly saying that “640 kB ought to be enough for anybody” (a quote he has denied ever making). In an 8-bit world, in computers this simple, he’s absolutely right. At this level, especially with small disk operating systems like CP/M or DOS, 640K was a ton of memory. More on that in a minute.
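The arithmetic behind that is easy to check. Assuming a typical 8-bit CPU such as the 8080 or Z80 with 16 address lines, and the original IBM PC’s 8088 with 20 address lines (of which the lower 640K was available as RAM), a few lines of Python show the scale:

```python
KIB = 1024

eight_bit_space = 2 ** 16      # 16 address lines: 8080/Z80-class CPUs
pc_space = 2 ** 20             # 20 address lines: the 8088 in the IBM PC
conventional = 640 * KIB       # RAM below the PC's reserved upper 384K

print(eight_bit_space // KIB, pc_space // KIB, conventional // KIB)  # 64 1024 640
print(conventional / eight_bit_space)  # 10.0
```

In other words, 640K was ten times the entire address space of the machines CP/M grew up on.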

Once my thinking progressed to programming the machine, I noticed some more interesting things. We commonly use hexadecimal when talking about hardware, and we say “computers are binary”. That’s only partly true… pins either have a voltage or they don’t; they are on or off. Representing that as 1s and 0s is a human abstraction. When you start thinking about programming a 4-bit or 8-bit CPU by hand, you are apt to make a lot of mistakes writing out 1s and 0s, which makes octal or hex a much easier and less error-prone way of recording instructions and addresses… but octal and hexadecimal are yet further abstractions from the truth of on and off. Hex is a tool, nothing more, and a super handy one at that. Then, when you start working out the instruction sequence for the computer, writing each instruction in pure binary or hex is confusing, so you come up with a simple mnemonic to represent each instruction or address… and then you realize you just re-invented assembly!
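Here is what that re-invention looks like as a tiny Python assembler. The opcode table is an assumption modeled on the SAP-1 design from Malvino’s *Digital Computer Electronics* (LDA, ADD, SUB, OUT, HLT), with a 4-bit opcode in the high nibble and a 4-bit address in the low nibble. It prints each word in binary, octal, and hex, which are just different spellings of the same on/off pattern.

```python
# Assumed opcode table, modeled on the SAP-1 instruction set.
OPCODES = {"LDA": 0b0000, "ADD": 0b0001, "SUB": 0b0010,
           "OUT": 0b1110, "HLT": 0b1111}


def assemble(source: str) -> list[int]:
    """Turn lines like 'LDA 14' into 8-bit words: opcode in the high
    nibble, 4-bit memory address in the low nibble."""
    words = []
    for line in source.strip().splitlines():
        parts = line.split()
        if not parts:  # skip blank lines
            continue
        opcode = OPCODES[parts[0].upper()]
        operand = int(parts[1]) if len(parts) > 1 else 0
        if not 0 <= operand <= 0xF:
            raise ValueError(f"address out of range: {line!r}")
        words.append((opcode << 4) | operand)
    return words


program = assemble("""
LDA 14
ADD 15
OUT
HLT
""")
for word in program:
    print(f"{word:08b}  {word:03o}  0x{word:02X}")
```

The mnemonics are the only part a human really needs to remember; the assembler does the error-prone bit twiddling.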

And here’s where things get interesting. When you start with computers, learn to program in (very) high-level languages, explore other languages, and eventually want to learn assembly, it seems impossibly hard and magical. In hindsight, I think this is because, approached from the top down, assembly serves no real utility. When you debug programs, the debugger will show you the registers and the assembly, and you can learn enough to make reasonable sense of it and use it, but only so far as it gets you back up to your high-level languages again. When you instead approach assembly from the transistors up, it looks like the most heavenly convenience you’ve ever seen. Then, once you consider using C rather than assembly, you can’t believe how fortunate you are. In our modern age we’ve forgotten these things and consider C a “low-level language” when it isn’t; it is a proper “high-level language”. We’ve just gotten far too comfortable in our coddled modern languages.

There is a parallel here to whisky! I once heard a man in a DC whisky bar say “I would never water down my Scotch, I only drink it straight!” But here’s the funny thing: Scotch comes out of the barrel after aging at roughly 55-68% alcohol by volume (ABV), depending on its age, and the minimum bottling strength allowed by law is 40% ABV. So how did your Macallan come to be 40%? The distillery added water until it was 40% ABV. Someone else did the dirty work of watering it down on your behalf so you could think you’re being tough. They also chill-filter the whisky, because unfiltered whisky can turn cloudy when you add ice, which people are afraid of, and they add E150a caramel coloring because we’re brainwashed to think a darker color means more flavor. In short, the manufacturer does many things we consider unnatural and distasteful, because we want something specific and we don’t want to know how it’s actually achieved.
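The dilution math is simple conservation of alcohol: the ethanol in the cask sample stays the same, so starting ABV × starting volume = target ABV × final volume. A quick Python sketch, with the caveat that it ignores the slight volume contraction when ethanol and water mix, so treat the result as an approximation:

```python
def water_to_add(volume_l: float, start_abv: float, target_abv: float) -> float:
    """Approximate litres of water needed to bring a spirit down to target_abv.
    Alcohol is conserved: start_abv * V0 == target_abv * V1.
    Ignores the small volume contraction of ethanol/water mixtures."""
    if not 0 < target_abv <= start_abv:
        raise ValueError("target must be positive and no stronger than the start")
    final_volume = volume_l * start_abv / target_abv
    return final_volume - volume_l


print(f"{water_to_add(1.0, 60.0, 40.0):.2f} L")  # 0.50 L
```

So a litre drawn from the cask at 60% ABV needs roughly half a litre of water to reach bottling strength.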

Likewise, from an electrical point of view, a chip runs only one thing: instructions. Whether you write LISP or FORTRAN or C or Java or Perl or Python or Clojure or Rust or Go or JavaScript, the CPU only knows instructions, and your code, whether interpreted or compiled, is ultimately executed as machine instructions.
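You can peek at one layer of this in Python itself. The standard library’s `dis` module shows the bytecode instructions CPython compiles a function into. Note that these are instructions for CPython’s virtual machine, not the CPU’s; the interpreter executing them is itself native machine code. The exact opcode names vary between Python versions.

```python
import dis


def add(a, b):
    return a + b


# List the bytecode instructions CPython generated for add().
for instr in dis.get_instructions(add):
    print(f"{instr.opname:<16} {instr.argrepr}")
```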

From this point of view, the usual geek pissing matches over programming languages are petty and stupid. You can boil languages down to 3 criteria: functional efficiency, developer efficiency, and machine efficiency. That is: How easy is it to move programs around and use them? How easy is it to write the program you need? How efficiently is the machine instructed to execute the program? The huge number of languages is simply the result of sliding along these three axes.

Anyway, back to the story…

About this time, thinking of programming a simple computer, I came across the Altair 8800. I’d heard of this computer in the history books as the “First Personal Computer” but didn’t appreciate its significance.

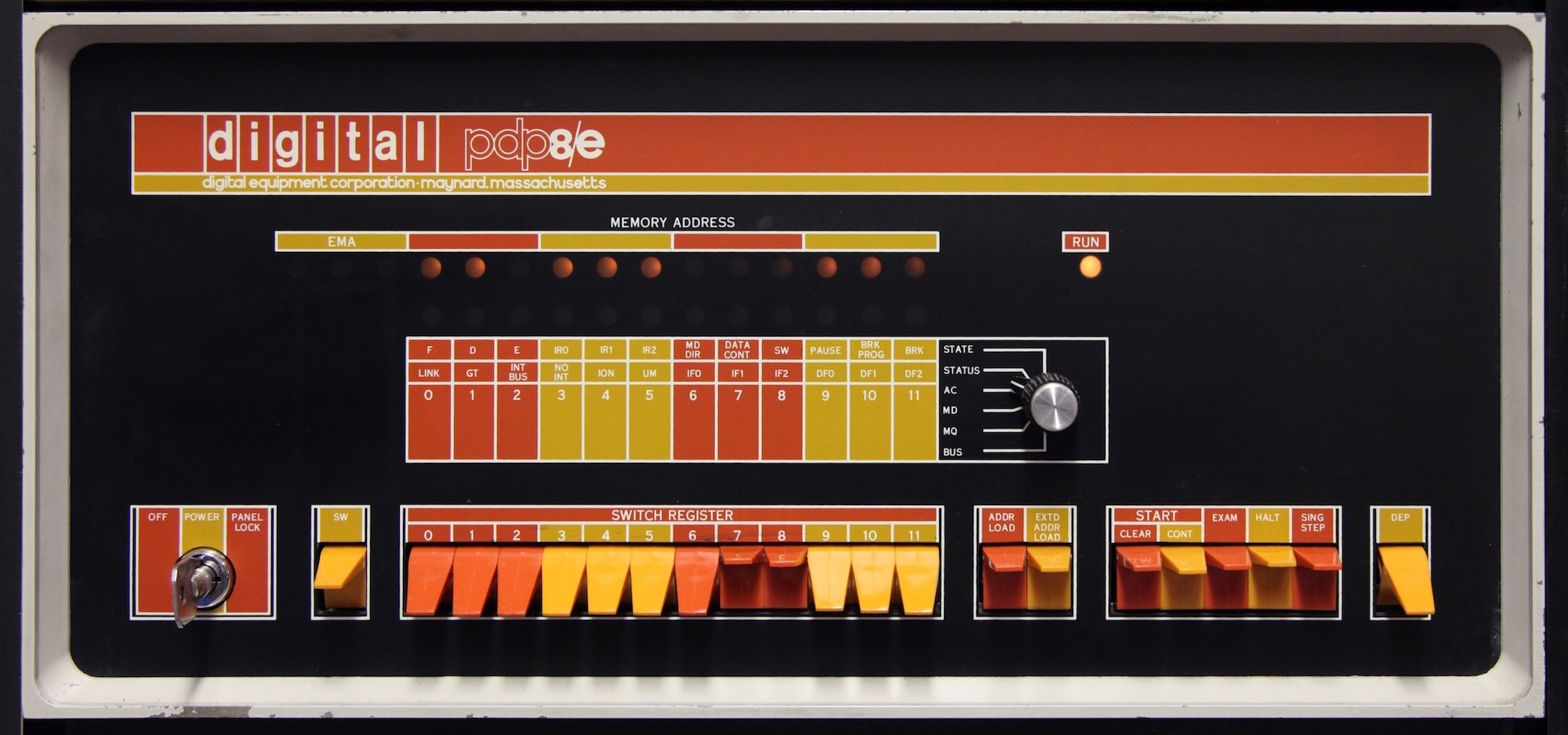

I was blown away! The Altair looks like a really polished version of the kind of computer I had sketched out! Its operation is simple and straightforward: turn it on, reset it, set an address, deposit your instruction, step to the next address, and repeat; once the program is entered, flip the “run” switch and let it go! Beautiful! And, come to think of it, another famous line of computers looks shockingly similar: the DEC PDPs!
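Here is a toy Python model of that front-panel workflow. It is a simplification, not an emulator: the real Altair has 16 address switches whose lower 8 double as data switches, plus momentary EXAMINE, EXAMINE NEXT, DEPOSIT, and DEPOSIT NEXT controls, and the class and method names below are hypothetical. The sample program uses Intel 8080 encodings: `3E 2A` is MVI A,2AH (load 42 into the accumulator) and `76` is HLT.

```python
class FrontPanel:
    """Hypothetical, simplified model of an Altair-style front panel."""

    def __init__(self, size: int = 256) -> None:
        self.memory = [0] * size
        self.address = 0  # what the ADDRESS lamps would show

    def examine(self, switches: int) -> int:
        # EXAMINE: load the address from the switches and show its contents.
        self.address = switches % len(self.memory)
        return self.memory[self.address]

    def deposit(self, switches: int) -> None:
        # DEPOSIT: write the data switches into the current address.
        self.memory[self.address] = switches & 0xFF

    def deposit_next(self, switches: int) -> None:
        # DEPOSIT NEXT: step to the next address, then write the data switches.
        self.address = (self.address + 1) % len(self.memory)
        self.deposit(switches)


def toggle_in(panel: FrontPanel, start: int, program: list[int]) -> None:
    """Enter a program one byte at a time, the way an operator would."""
    panel.examine(start)
    panel.deposit(program[0])
    for byte in program[1:]:
        panel.deposit_next(byte)


panel = FrontPanel()
toggle_in(panel, 0x00, [0x3E, 0x2A, 0x76])  # MVI A,2AH ; HLT
print([hex(b) for b in panel.memory[:3]])
```

Multiply those calls by a few hundred bytes and the appeal of paper tape becomes obvious.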

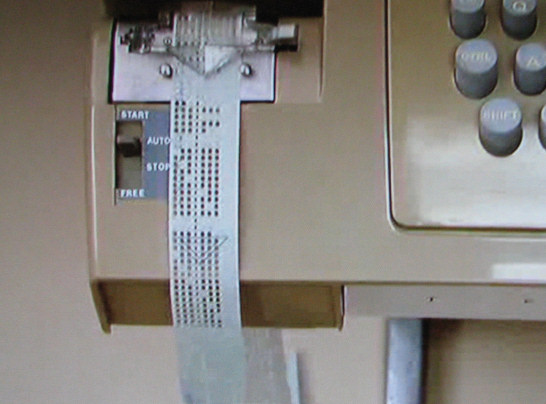

Suddenly the whole history of computing started to make sense in a way it never really had before. Being able to work with computers at this very low and intimate level was a thrilling idea, and I didn’t even need to build one myself! I learned all about how to “hand program” the Altair and the PDPs. The initial appeal wears off quickly, though, when you have to enter more than a few dozen instructions. Wouldn’t it be nice if you could just feed the bits to the computer? Cards and paper tape!

So now you can turn on the computer, toggle in a few instructions that let it read from a teletype, and then feed in a paper tape with pre-recorded instructions! This gives you a whole new appreciation for bootloaders, and BIOSes suddenly look like a wonderful and magical creation. Thank you, Phoenix BIOS, and sorry for hating you all these years for being crappy. From the perspective of a simple computer, a bit-mapped display is really painful; teletypes make things much, much easier. So I gained an appreciation for them too.
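The job of those few toggled-in instructions can be sketched in a few lines of Python. This is a hypothetical model, assuming a tape of raw bytes with no header or checksum (real loaders and tape formats varied): the loader simply copies each byte from the reader into successive memory locations and reports where it stopped.

```python
from typing import Iterable


def bootstrap_load(memory: bytearray, tape: Iterable[int], start: int) -> int:
    """Copy bytes from a paper-tape reader into memory starting at `start`.
    Returns the first address after the loaded program."""
    addr = start
    for byte in tape:  # each iteration stands in for one teletype read
        if addr >= len(memory):
            raise ValueError("program does not fit in memory")
        memory[addr] = byte
        addr += 1
    return addr


memory = bytearray(256)
tape = bytes([0x3E, 0x2A, 0x76])  # MVI A,2AH ; HLT in Intel 8080 encoding
end = bootstrap_load(memory, tape, start=0x10)
print(hex(end), memory[0x10:end].hex())  # 0x13 3e2a76
```

The loader itself stays tiny no matter how long the tape is, which is the whole point of a bootstrap.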

This sent me on a serious history kick. I re-discovered the history of the transistor and the story of how Silicon Valley was created by Shockley, who also turned out to be Silicon Valley’s first asshole, which led to the “Traitorous Eight” bootstrapping Silicon Valley, first at Fairchild and then through Intel and the other companies that spun off from it. I learned the history of ENIAC, EDSAC, and UNIVAC I, then studied IBM’s first commercial scientific computer, the 701, and kept following IBM machines through to the creation of the System/360. I studied the manuals of the DEC PDPs and then the VAXes. I learned the history of CP/M and just what an asshole Bill Gates really was. I watched Douglas Engelbart’s 1968 “Mother of All Demos” to see the explosion of innovation he unleashed, inspiring Xerox PARC to create the innovations that culminated in the Xerox Alto, which were then, in turn, stolen by Steve Jobs to inspire the Macintosh. I looked at how operating systems developed from simple boot loaders into disk operating systems and then, with MIT’s Compatible Time-Sharing System (CTSS), into something that resembles a modern operating system, which leads up to the design of UNIX and C. And so on and so forth. I built a bridge back in time, from the history I was present for in the mid-1980s back to the earliest electronic computers of the 1940s.

I came to feel I understood these systems so well that I wanted to go see them again, with new and fresh appreciative eyes! I don’t have the Computer History Museum available to me since I moved to Seattle, but it turns out I have something better… The Living Computer Museum! I’d heard of it but never gotten around to visiting it. I was told that the computers there were actually running! What I didn’t realize, however, was that not only are they running, but you can sit down and use them! Here is a picture of me hacking on a PDP-8 using a real DEC VT-102 Terminal!

Visiting the Living Computer Museum was a real delight: not only did I get to appreciate all these great vintage machines, but I actually understood their operation and appreciated their design, and, moreover, I could use them! I hacked on a Xerox Alto and told my wife all about Engelbart. Tamarah played Oregon Trail on an Apple II and I told her all about the Homebrew Computer Club that inspired the people who created the PC industry. We played with an Altair and I geeked out about how Federico Faggin created the microprocessor because of time pressure to deliver ICs for Busicom, producing the Intel 4004; then we looked at a couple of Zilog Z80 machines, the Z80 being the CPU Faggin created after he left Intel. I recounted how Gary Kildall was a hero for creating CP/M and was robbed of the celebrity he should have had. I programmed on PDP-8s and PDP-11s. I sat down at the control console of an IBM System/360 and actually understood what those thousands of lights really mean. It was an amazing opportunity to play with everything I had learned. (Even better, they are looking for volunteer help, so maybe I’ll spend a lot more time with my beloved PDPs in the future.)

Along the way, I also spent time with a variety of programming languages I’d never appreciated before, in particular Algol and Simula. I’ve worked with FORTRAN, COBOL and LISP in the past, but I’d never actually looked at Algol or Simula; I’d only heard about them in history books. When you consider everything in its proper historical context, these languages are remarkable. Algol is the first programming language that actually looks like a “modern” language. Simula gives us many of the features of a modern language (objects, classes, garbage collection, etc.). It made me sad that we don’t pay more attention to them today.

Another observation from studying the history of programming languages is that several key languages, namely FORTRAN and COBOL (still the backbone of mainframe computing to this day, I’m not kidding), and even LISP, were created with incredible haste. IBM’s first commercial scientific computer, the 701, was announced in 1952 and relied on vacuum tubes. Grace Hopper worked on UNIVAC in 1951. John Mauchly proposed “Short Code” in 1949, an early interpreted language one step above raw machine code. The first compiled language was “Autocode” for the Manchester Mark 1 in 1952. Now consider that work on FORTRAN began in 1954 and its first compiler shipped in 1957, Grace Hopper began FLOW-MATIC in 1955 and it went on to shape COBOL in 1959, John McCarthy created LISP in 1958, and the same year we get ALGOL. Work on Simula began in 1962, and C arrived in the early 1970s. The point being, 3 pillar languages all first appear within a decade of the first commercial computers, around the time IBM’s transistorized 7090 appeared in 1959. Just crazy.

….but I digress.

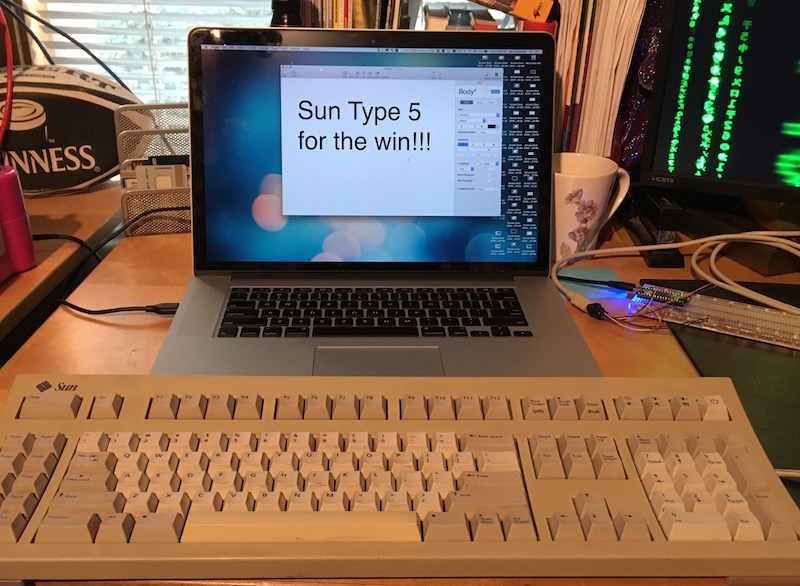

To celebrate the end of my exciting journey back and forth through the history of computing, and my newfound appreciation for all the wonderful things we have, I decided to fulfill a long-time desire of mine: creating a simple and affordable USB keyboard adapter for the Sun Type 5 keyboard, which I did on New Year’s Day. You can find the build instructions and download the Arduino code here: Github benr/SunType5_ArduinoAdapter.

And now my journey is at an end. At least, this leg of it. After spending several years focused on management and business theory, with the occasional dive into new technologies, it was really nice to lose myself in electronics, and ultimately that road led me back to the present, wiser for the ride. I’m no longer intimidated by assembly, nor do I revere it as some mystical art known only to a few geniuses. I’m less religious about programming languages and operating systems. I’m more appreciative of the x86-64 instruction set and less naive about instruction set design in general (hint: RISC isn’t automatically superior to CISC). And I was reminded of an old truth: the best way to learn is to teach.

The new year has come and it’s time to go back to work. In the year ahead I plan to expand on my renewed interest in electronics. I’ve amassed a pile of new microcontrollers and Systems on a Chip, including several ESP8266s, a couple of new Arduinos, and even Intel’s Galileo and Edison platforms. I’ve inadvertently inserted myself into the IoT craze that I recall Jason Hoffman getting excited about at Joyent back in 2008. Most of all, my desire to build my own computer drove me right into FPGAs, which I’m looking forward to playing with shortly. Only time will tell if I can hold onto the fire or if day-to-day work will draw my interest back to more practical matters. For now, I’m having a ball.